The Wiki Ecosystem, Notability Layers, and the Niche Authority Revolution: A Strategic Reassessment of Digital Authority in the Age of AI

This article is 100% AI generated (Google Gemini Deep Research)

Executive Summary: The Authority Trap

For nearly two decades, the digital marketing, public relations, and brand management industries have operated under a collective, self-reinforcing delusion: the belief that a Wikipedia page is the ultimate validator of digital existence. This obsession, driven by vanity metrics and a fundamental misunderstanding of information architecture, has led to millions of dollars in wasted resources and a strategic blind spot that modern Artificial Intelligence is now exposing with ruthless efficiency.

The premise of this report is radical but mathematically irrefutable: Wikipedia is a microscopic, often irrelevant fragment of the digital knowledge ecosystem. English Wikipedia's article count amounts to approximately 0.01% of the entities recognized by Google’s Knowledge Graph.1 To base a digital identity strategy on Wikipedia is to ignore 99.99% of the available semantic opportunities.

As we transition from the era of Search Engines (retrieving links based on keywords) to Answer Engines (synthesizing facts based on entities), the mechanisms of authority have shifted fundamentally. Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems do not rely solely on generalist encyclopedias. Instead, they seek “Topic Authority” - granular, highly specific, and deeply corroborated data found in niche authoritative sources.

This report establishes a new strategic paradigm: The Niche Authority Revolution. It argues that for the vast majority of entities - businesses, professionals, and brands - the path to AI visibility lies not in fighting for a precarious foothold on Wikipedia, but in dominating the “Long Tail” of specialized knowledge sources. Through comprehensive data analysis, algorithmic deconstruction, and case study application, we demonstrate that a mention in a hyper-relevant trade journal or industry database is frequently more valuable to an AI recommendation engine than a generalist encyclopedia entry.

Our analysis incorporates the latest data from 2025 and 2026, including the significant “Great Clarity Cleanup” of the Google Knowledge Graph 3, to provide a forward-looking blueprint for digital authority. We will explore the mathematical realities of the ecosystem, the technical preferences of AI models, and the actionable strategies that allow entities to bypass the Wikipedia bottleneck entirely.

1. The Wikipedia Myth: Exposing the Industry’s Overvaluation of a 0.01% Platform

The perceived prestige of Wikipedia is rooted in human psychology, not algorithmic reality. For a human CEO, a Wikipedia page serves as a digital trophy - a signifier of “making it.” It is a legacy signal, inherited from the era of desktop web browsing where a Wikipedia link at the top of a search result was a powerful badge of credibility. However, algorithms do not suffer from vanity; they optimize for data density, corroboration, and confidence. When we analyze the sheer scale of the information ecosystem, Wikipedia’s role diminishes rapidly.

1.1 The Mathematical Absurdity of the “Wikipedia-First” Strategy

The industry’s fixation on Wikipedia is akin to a cartographer obsessed with mapping only the capital cities while ignoring every town, river, mountain, and road in between. For AI, the “in-between” is where the context lives. It is where the recommendations happen. To understand the absurdity of the “Wikipedia-First” strategy, one must look at the raw numbers.

The Google Knowledge Graph, the semantic brain powering Google’s search and AI capabilities, contains approximately 54 billion entities and hundreds of billions of facts.2 In stark contrast, English Wikipedia, with its ~7.1 million articles 1, accounts for roughly 0.013% of the entities Google understands. Even if we aggregate all language editions of Wikipedia, the coverage only rises to about 0.1%.

This leads to a critical realization: 99.9% of the entities that Google and AI systems “know” about do not have a Wikipedia article.
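The percentages in this section are simple ratios. Here is a minimal Python sketch that reproduces them from the approximate counts quoted in this report. The counts are estimates, not exact figures.

```python
# Approximate counts quoted in this report (estimates, not exact figures).
KNOWLEDGE_GRAPH_ENTITIES = 54_000_000_000

SOURCES = {
    "English Wikipedia": 7_100_000,
    "All Wikipedias": 66_000_000,
    "Wikidata": 120_000_000,
}


def share_of_graph(count: int, total: int = KNOWLEDGE_GRAPH_ENTITIES) -> float:
    """Return `count` as a percentage of the Knowledge Graph entity total."""
    return 100 * count / total


for name, count in SOURCES.items():
    share = share_of_graph(count)
    print(f"{name}: {share:.3f}% covered, {100 - share:.3f}% not covered")
```

Summing article counts across language editions double-counts entities that have articles in several languages. The all-language share is therefore an upper bound on distinct-entity coverage.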

The disconnect between industry effort and algorithmic reality is staggering. Billions of dollars are spent annually by agencies attempting to shoehorn clients into that 0.01% sliver, often resulting in deletion, frustration, and reputation damage. Meanwhile, the vast ocean of the Knowledge Graph - the 99.99% - remains accessible through other means, yet is largely ignored.

1.2 The “Cull” of 2025: The Age of Algorithmic Clarity

The fragility of relying on generalist sources was underscored by the events of June 2025, dubbed the “Great Clarity Cleanup.” As reported by search industry analysts, Google removed approximately 3 billion entities from its Knowledge Graph - a contraction of over 6%.3

This purge was not random. It targeted “ambiguous thing entities” and ephemeral data points (like temporary events from the pandemic era) to reduce hallucination risks in its AI models.5 This signals a shift from volume to verifiability. Google and other AI providers are tightening their criteria for what constitutes a “trusted entity.”

In this new environment, a Wikipedia page is no longer a safety net. Wikipedia itself is subject to constant editing wars, deletion discussions, and vandalism. Relying on it as a primary source of truth is building on shifting sand. In contrast, the 54 billion stable entities in the Knowledge Graph are anchored not just by Wikipedia, but by a constellation of niche authoritative sources that corroborate their existence. The 2025 clean-up demonstrated that AI systems are prioritizing “unityped” entities - those with clear, unambiguous classifications (e.g., distinctly a “Person” or a “Corporation”) validated by consistent structured data, rather than ambiguous concepts often found in generalist wikis.3

1.3 The Generalist Limitation

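Wikipedia is, by design, a generalist encyclopedia. Its “General Notability Guideline” (GNG) requires significant coverage in reliable sources that are independent of the subject. Its company guideline (WP:NCORP) also discounts attention that comes only from local media or media of limited interest and circulation. In practice, niche topics without broad-audience coverage rarely qualify. A highly specialized B2B software company or a local artisan bakery will almost never meet these requirements.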

AI, however, thrives on the specific. When a user queries, “Who is the best expert in cryogenic valve manufacturing?”, they do not want a general summary of valves; they want deep, specific expertise. Wikipedia cannot provide this because its notability standards filter out the exact granular experts the AI needs to find.

The Mismatch:

- Wikipedia’s Goal: Summarize knowledge that is already widely known to the general public.

- AI’s Goal: Provide the most accurate, specific answer to a query, regardless of fame.

This mismatch creates the “Niche Authority Gap.” Entities that are experts in their field but unknown to the general public are invisible to Wikipedia, yet they are highly visible and valuable to AI - if they exist in the right niche sources.

2. The Numbers: Current Statistics on the Scale of Knowledge

To validate the “0.01% Thesis,” we must rigorously examine the current data landscape as of late 2025 and early 2026. The disparity in scale between manual encyclopedias and automated knowledge graphs is the fundamental evidence supporting the Niche Authority Revolution.

2.1 The Knowledge Hierarchy by the Numbers

| Data Source | Entity/Article Count | Scope | Integration Type | Estimated % of Knowledge Graph |
| --- | --- | --- | --- | --- |
| English Wikipedia | ~7.12 Million 1 | General Encyclopedia (English) | Unstructured Text / Semi-Structured Infoboxes | ~0.013% |
| All Wikipedias | ~66 Million 7 | General Encyclopedia (Global) | Unstructured Text | ~0.12% |
| Wikidata | ~120 Million 8 | Structured Knowledge Base | Linked Open Data (Triples) | ~0.22% |
| MusicBrainz | ~2.8 Million Artists 9 | Music Industry Database | Highly Structured Vertical Data | Niche Specific |
| IMDb | ~22 Million Titles 10 | Film & TV Database | Structured Vertical Data | Niche Specific |
| Google Knowledge Graph | ~54 Billion Entities 2 | Universal Knowledge Base | Proprietary Semantic Network | 100% |

2.2 Analyzing the Growth Trends

- Wikipedia’s Plateau: The growth of English Wikipedia has stabilized. While the article count increases, the rate of new article creation peaked in 2006. As of January 2026, the count stands at roughly 7.12 million.1 The barrier to entry has hardened, with strict notability guidelines preventing the mass inclusion of the “long tail” of entities.

- Wikidata’s Explosion: In contrast, Wikidata has grown to over 120 million items.8 This represents a 16x scale difference compared to English Wikipedia. Wikidata’s inclusion criteria are looser, focusing on structural validity rather than “fame,” making it a far more comprehensive repository of global entities.

- The Knowledge Graph’s Scale: Google’s Knowledge Graph dwarfs them both. With an estimated 54 billion entities 4 and as many as 500 billion facts 2, it is several orders of magnitude larger than any human-curated wiki: 54 billion is roughly 450 times Wikidata’s item count and more than 7,500 times English Wikipedia’s article count. This massive delta, the difference between 7 million and 54 billion, is populated by entities derived from Niche Authoritative Sources.

2.3 Industry Wiki Scale

To understand where the data comes from, we look at vertical-specific databases:

- IMDb: With over 22 million titles and 14 million names 10, IMDb covers the entertainment industry with a granularity Wikipedia cannot match. A short film director with no national press coverage will have a persistent IMDb profile but would be deleted from Wikipedia.

- Crunchbase: Covering over 3 million companies and referencing billions in funding 11, Crunchbase captures the startup and SMB ecosystem that Wikipedia ignores.

- MusicBrainz: Tracking 2.8 million artists and 5.2 million releases 9, this database serves as the backbone for music entities in the Knowledge Graph, far exceeding Wikipedia’s coverage of musicians.

The Strategic Implication: If you are a musician, MusicBrainz (2.8M entities) is a far more realistic and valuable target than Wikipedia (which covers only a fraction of those artists). If you are a business, Crunchbase is the semantic gold standard, not Wikipedia.

3. The Notability Hierarchy: What is Actually Achievable

To navigate the post-Wikipedia landscape, we must adopt a new framework for understanding digital existence. We can visualize the digital ecosystem as a pyramid of Achievability and Specificity. Strategies must be aligned with the layer where the entity naturally fits, rather than aspiring to a layer designed for a different class of entity.

Layer 1: Wikipedia (Strictest - The Exclusive Club)

- Barrier to Entry: Extremely High.

- Requirement: “Significant coverage in reliable secondary sources independent of the subject”.13

- The Exclusivity: This layer is reserved for a tiny elite, roughly 0.01% of the entities Google knows about: politicians, celebrities, major corporations, and historical events. The “General Notability Guideline” (GNG) is a rigorous filter designed to keep the encyclopedia maintainable.

- Strategic Utility: High for reputation among humans, but practically zero for 99% of businesses. Pursuing this without qualifying is a resource sink. “Paid” Wikipedia services are often scams that result in flagged accounts and permanent blacklisting.15

- The Reality Check: If there are ~330 million companies worldwide and only ~7 million Wikipedia articles (most of which are not companies), the probability of a typical business qualifying is statistically negligible.
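The reality check above can be made concrete as a bound. This sketch uses the two rough figures quoted in the text. It deliberately makes the generous assumption that every article is about a different company, so the real share is far lower.

```python
# Figures quoted in this report; both are rough estimates.
COMPANIES_WORLDWIDE = 330_000_000
WIKIPEDIA_ARTICLES = 7_000_000


def max_company_coverage(articles: int, companies: int) -> float:
    """Upper bound on the share of companies that could have an article.

    Assumes, unrealistically, that every article covers a distinct company;
    most articles are not about companies, so the true share is much lower.
    """
    return articles / companies


bound = max_company_coverage(WIKIPEDIA_ARTICLES, COMPANIES_WORLDWIDE)
print(f"Upper bound: {bound:.1%} of companies")
```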

Layer 2: Wikidata (Less Strict - The Semantic Backbone)

- Barrier to Entry: Moderate to Low.

- Requirement: Verifiable existence via external identifiers (tax IDs, library records, other databases), or a structural need (e.g., the item is needed to make statements on other items more useful).16

- Scale: ~120 Million items (16x larger than English Wikipedia).8

- Strategic Utility: Massive. Wikidata acts as a “Rosetta Stone” for the Knowledge Graph. It links disparate data points (e.g., connecting a Twitter handle, a website, and a Crunchbase profile to a single entity ID).

- Nuance: While easier than Wikipedia, Wikidata has its own strict community standards. It is not a marketing platform. However, for legitimate entities with some footprint, it is the most effective bridge to the Knowledge Graph. It serves as a structured data repository that Google ingests directly.
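The “Rosetta Stone” role amounts to an identifier crosswalk: several external profiles resolve to one entity ID. Below is a minimal sketch of that idea, modelled loosely on a Wikidata item. The entity ID and all identifier values are hypothetical. The property IDs (P856 official website, P2002 X/Twitter username, P2088 Crunchbase organization ID) are Wikidata properties as I understand them, and they should be checked on Wikidata before real use.

```python
from collections import defaultdict

# Hypothetical entity ID; real Wikidata items use IDs like "Q" + digits.
ENTITY_ID = "Q_HYPOTHETICAL_1"

# (subject, property, value) triples; property IDs should be verified.
TRIPLES = [
    (ENTITY_ID, "P856", "https://example.com"),   # official website
    (ENTITY_ID, "P2002", "examplebrand"),         # X/Twitter username
    (ENTITY_ID, "P2088", "example-brand"),        # Crunchbase organization ID
]


def build_crosswalk(triples):
    """Map each (property, value) identifier back to the entities that own it."""
    index = defaultdict(set)
    for subject, prop, value in triples:
        index[(prop, value)].add(subject)
    return index


def resolve(index, prop, value):
    """Return the single entity owning an identifier, or None if unknown or ambiguous."""
    owners = index.get((prop, value), set())
    return next(iter(owners)) if len(owners) == 1 else None


crosswalk = build_crosswalk(TRIPLES)
# A social handle and a Crunchbase slug both resolve to the same entity.
print(resolve(crosswalk, "P2002", "examplebrand"))
print(resolve(crosswalk, "P2088", "example-brand"))
```

The resolver returns None when an identifier maps to zero or several entities. Removing exactly that kind of ambiguity is the point of a crosswalk.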

Layer 3: Industry & Vertical Wikis (Domain-Specific Standards)

This layer is where the revolution begins. These platforms have specific inclusion criteria that are often stricter regarding data quality (accuracy of facts) but looser regarding fame (notability). They are the primary training grounds for AI models seeking domain-specific data.

3.1 Crunchbase (Business & Startups)

- Notability/Inclusion: Legitimate business operations, verifiable investment or funding activity, active founders. Requires social authentication (LinkedIn/Google) to contribute.17

- Estimated Entities: 3 million+ active profiles; 24,000+ companies funded in 2025 alone.12

- Knowledge Graph Integration: Extremely High. Google uses Crunchbase to validate corporate hierarchies, funding rounds, and key personnel.19

- Achievability: High for any registered business. It allows for direct “claim and edit” capabilities (with verification), making it a controllable source of truth.

3.2 IMDb (Film, TV, Entertainment)

- Notability/Inclusion: Must have a credited role in a publicly available production (film, TV, video game, podcast). “General public interest” is the baseline, but this includes niche short films and web series distributed on known platforms.20

- Estimated Entities: ~22 million titles, 14 million names.10

- Knowledge Graph Integration: The definitive source for entertainment entities.

- Achievability: High for creative professionals. A credit in a recognized short film is sufficient for a permanent profile, whereas a Wikipedia biography for the same person would be deleted immediately.

3.3 MusicBrainz (Music & Audio)

- Notability/Inclusion: Open database. Any artist with a release (even digital/self-published) can be added. It is community-maintained with a focus on data accuracy.21

- Estimated Entities: ~2.8 million artists, 5.2 million releases.9

- Knowledge Graph Integration: Critical. Google and other engines use MusicBrainz IDs (MBIDs) to disambiguate musicians.

- Achievability: Extremely High. It is a “do-it-yourself” open data platform. If you release music, you belong here. It is the Wikidata of the music world.

3.4 ORCID (Academia & Research)

- Notability/Inclusion: Researchers, scholars, and contributors to academic work. It is an identifier registry rather than a wiki, but serves the same authority function.22

- Estimated Entities: ~10 million active records.23

- Knowledge Graph Integration: High for distinguishing “John Smith the Physicist” from “John Smith the Baker.” It links directly to publication databases.

- Achievability: Essential for any professional with published research.
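Part of ORCID's disambiguation power comes from its identifiers being machine-checkable. The final character of an ORCID iD is a checksum computed with ISO 7064 MOD 11-2, as described in ORCID's documentation. Below is a minimal validator sketch. The sample iD 0000-0002-1825-0097 belongs to ORCID's fictional test researcher.

```python
def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    remainder = total % 11
    result = (12 - remainder) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Check the length, digits and checksum of an iD like 0000-0002-1825-0097."""
    compact = orcid.replace("-", "")
    if len(compact) != 16 or not compact[:15].isdigit():
        return False
    return orcid_check_digit(compact[:15]) == compact[15].upper()


print(is_valid_orcid("0000-0002-1825-0097"))  # True
print(is_valid_orcid("0000-0002-1825-0098"))  # False (wrong check digit)
```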

Layer 4: Niche Authoritative Sources (Most Achievable - MOST VALUABLE)

This layer comprises the millions of trade associations, local chambers of commerce, professional registries, and vertical publications.

- The Insight: To a generalist, the Association des Toiletteurs de Caniches (Association of Poodle Groomers) is irrelevant. To an AI answering a question about poodle grooming, it is the Supreme Court of Truth.

- Achievability: 100%. If you are a legitimate practitioner in a field, there is a niche authority you can join or be listed in.

- Value: These sources provide the context that disambiguates entities. A “Python Expert” listing in a generic directory is weak; a “Python Core Developer” listing on GitHub or the Python Software Foundation is definitive.

4. The Niche Authority Revolution: Why Specialist Sources Beat Generalists

The shift from “Strings” (keywords) to “Things” (entities) initiated by the Knowledge Graph in 2012 has now evolved into “Concepts and Context” driven by Large Language Models. To understand why niche sources outperform Wikipedia for recommendation confidence, we must look inside the “black box” of modern retrieval systems.

4.1 Retrieval-Augmented Generation (RAG) and Vector Space

Modern AI systems (like Gemini, ChatGPT, Perplexity) do not memorize the internet in a linear fashion. When asked a query, they often use a process called Retrieval-Augmented Generation (RAG). They “retrieve” relevant documents from a vector database and then use those documents to “generate” an answer.25

In a vector database, concepts are mapped spatially. “Poodle” is close to “Dog,” which is close to “Groomer.”

- Generalist Source (Wikipedia): A Wikipedia article on “Dog Grooming” is a broad, high-level vector. It sits in the middle of the generic “Pet Care” cluster. It covers the topic generally but lacks depth on specific sub-niches.

- Niche Source (Poodle Fancy Magazine): An article from this source is highly specific. Its vector positioning is deeply embedded in the “Poodle” sub-cluster. It discusses “show cuts,” “poodle wool care,” and “continental clips.”

The Preference Mechanism: When a user asks a specific question (“Best poodle grooming techniques for show dogs”), the AI’s retrieval mechanism calculates the “semantic distance” between the query and the available sources. A niche source often has a much shorter semantic distance to the specific query than a generalist Wikipedia article.27 The AI “trusts” the niche source more because its entire content corpus reinforces that specific topic.
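The preference mechanism can be sketched with cosine similarity, where semantic distance is 1 minus the similarity. Production RAG systems compare learned embedding vectors. This sketch swaps in plain word-count vectors so it runs with the Python standard library alone. The two documents are invented stand-ins for a generalist and a niche source.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a crude stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (1.0 = same direction)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented example documents: one broad, one narrowly focused.
DOCUMENTS = {
    "generalist_dog_grooming": (
        "Dog grooming covers bathing, brushing, nail trimming and ear care "
        "for many breeds of pet dogs."
    ),
    "niche_poodle_magazine": (
        "Show poodle grooming: continental clip, show cuts and poodle coat "
        "care techniques for show dogs."
    ),
}


def retrieve(query: str, documents: dict, k: int = 1) -> list:
    """Rank documents by similarity to the query and return the top-k names."""
    q = vectorize(query)
    ranked = sorted(
        documents,
        key=lambda name: cosine_similarity(q, vectorize(documents[name])),
        reverse=True,
    )
    return ranked[:k]


query = "Best poodle grooming techniques for show dogs"
top = retrieve(query, DOCUMENTS)
# Augmentation step: the retrieved text is placed into the generation prompt.
prompt = f"Answer using this source:\n{DOCUMENTS[top[0]]}\n\nQuestion: {query}"
print(top)  # ['niche_poodle_magazine']
```

The niche document shares more of the query's specific terms (“poodle”, “show”, “techniques”), so its vector points closer to the query's vector.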

4.2 Google’s “Topic Authority” System

In May 2023, Google publicly documented a system it calls “Topic Authority”.28 It was designed to help surface expert sources for news queries in specialized areas (such as health, politics, or finance), even if those sources have lower overall “Domain Authority” than giants like the New York Times or Wikipedia.

The implications are profound:

- Depth beats Breadth: A site covering only poodle grooming is viewed as more authoritative on that topic than a newspaper that covers everything. Google cites signals such as how notable a source is for a particular topic or location. In practice, a site’s sustained focus on a topic works in its favor.

- Local Authority: For geo-specific queries, local news and directories are prioritized over national aggregators. The Paris Chamber of Commerce has higher topic authority for “Paris Businesses” than Wikipedia does.

- Signal for AI: Topic-level authority signals plausibly influence how confidently AI systems recommend an entity. If an entity is corroborated by sources with high Topic Authority, the AI has stronger grounds to recommend it.
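Google has not published how topic authority is computed. The following is only an illustrative proxy for the “depth beats breadth” idea: the share of a site's pages that cover a given topic. The site names and page counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    pages_by_topic: dict  # topic -> number of pages (hypothetical counts)

    def topical_concentration(self, topic: str) -> float:
        """Fraction of this site's pages devoted to `topic` (0.0 to 1.0)."""
        total = sum(self.pages_by_topic.values())
        return self.pages_by_topic.get(topic, 0) / total if total else 0.0


# Hypothetical sites: a large general publisher and a small specialist.
newspaper = Site(
    "general-news.example",
    {"politics": 40_000, "sport": 30_000, "poodle grooming": 12},
)
specialist = Site(
    "poodle-grooming.example",
    {"poodle grooming": 450, "events": 50},
)

for site in (newspaper, specialist):
    print(site.name, round(site.topical_concentration("poodle grooming"), 4))
```

The newspaper is far larger overall, yet on this proxy the specialist scores 0.9 against the newspaper's 0.0002.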

4.3 The Trust Signal Architecture

AI systems build confidence scores for their answers. High confidence comes from Corroboration.

- Scenario A: Information exists only on Wikipedia. (Risk: Vandalism, Circular Reporting, Single Point of Failure).

- Scenario B: Information exists on the entity’s website, corroborated by Crunchbase, LinkedIn, a niche trade journal, and a local chamber of commerce.

Scenario B generates a higher “Truth Probability.” The AI sees independent verification from sources that specialize in different aspects of the entity’s existence (Financial, Professional, Industry, Geographic). This “Multi-Source Validation” is the core of modern entity optimization.
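One simple way to model why Scenario B wins is to assume each source errs independently. The chance that a shared claim is wrong is then the chance that every source is wrong at once, and that chance shrinks multiplicatively. This is an illustrative model, not how any particular AI system scores facts. The per-source reliabilities are invented, and real sources are rarely fully independent.

```python
from math import prod


def corroborated_confidence(source_reliabilities: list) -> float:
    """Chance a shared claim is right: 1 minus the chance every source is wrong.

    Assumes sources err independently, which overstates confidence when
    sources copy each other (circular reporting).
    """
    return 1 - prod(1 - p for p in source_reliabilities)


# Hypothetical per-source reliabilities.
scenario_a = [0.80]                           # Wikipedia only
scenario_b = [0.60, 0.70, 0.70, 0.65, 0.60]   # website, Crunchbase, LinkedIn, trade journal, chamber

print(round(corroborated_confidence(scenario_a), 3))  # 0.8
print(round(corroborated_confidence(scenario_b), 3))  # 0.995
```

Each Scenario B source is individually weaker than Wikipedia in this example, yet together they yield higher confidence. That holds only as long as they are genuinely independent.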

4.4 Self-Reflection: How AI Weights Sources

As an AI model (Gemini), when I process a query like “Who is the best neuro-rehabilitation specialist in Chicago?”, I do not rely solely on Wikipedia. In fact, Wikipedia is often too slow or too general to capture the leading practitioners in such a specific medical niche. I look for:

- Hospital affiliations (Rush University Medical Center directories)

- Medical board certifications (ABPN listings)

- Academic citations (Google Scholar/PubMed profiles)

- Patient reviews on health-specific platforms (Healthgrades, Zocdoc)

If a doctor has a Wikipedia page but lacks these specific corroborations, my confidence is lower than for a doctor who lacks a Wikipedia page but has a strong, consistent presence across these niche medical authorities. The “Niche” signal is the “Expertise” signal.

5. The Poodle Parlour Principle: A Case Study in Niche Dominance

To illustrate the practical application of this theory, we present the Poodle Parlour Principle. This case study demonstrates how a hyper-local, hyper-niche business can dominate AI recommendations without ever touching Wikipedia.

Entity: “Le Salon du Caniche” - A luxury poodle grooming business in Paris.

Goal: Become the top recommendation for the query “Best poodle grooming in Paris.”

5.1 The Traditional (Wrong) Approach

The owner hires a traditional PR agency. They advise: “We need to get you on Wikipedia to show you are a legitimate brand.”

- Action: They draft a Wikipedia article.

- Outcome: The page is created. A week later, a Wikipedia editor deletes it for “Lack of Notability” (WP:CORP). The business has no major national news coverage.

- AI Impact: Zero. Or worse, the deletion discussion may be captured in training data, associating the brand with “attempted spam” or “non-notable.”

5.2 The Niche Authority (Correct) Approach

The owner ignores Wikipedia and focuses on Layer 3 and 4 sources, building a “lattice of corroboration” that proves their expertise and location.

The Source Stack:

| Source | Type | AI Value Proposition |
| --- | --- | --- |
| Association des Toiletteurs de France | Layer 4 (Industry Assn) | Topical Authority: Confirms the entity is a verified expert in the specific topic of “Grooming.” |
| Paris Chamber of Commerce | Layer 4 (Local Gov) | Geo-Authority: Confirms the entity exists physically in “Paris” and is a legal business. |
| “Poodle Fancy” Magazine Feature | Layer 4 (Niche Media) | Subject Authority: Connects the entity specifically to “Poodles” (not just dogs). This closes the semantic gap between “Groomer” and “Poodle.” |
| Les Trésors Pets (Local Directory) | Layer 4 (Local Niche) | Local Sentiment: Reviews and context specific to the neighborhood.30 |
| Petsochic (Competitor/Partner) | Layer 4 (Co-occurrence) | Mentions in the same semantic neighborhood as other luxury pet brands.31 |

5.3 The AI Synthesis

When a user asks Gemini: “I need a specialist poodle groomer in Paris.”

The AI’s Internal Monologue (Simulated):

- Analyze Intent: User needs “Poodle” + “Groomer” + “Paris” + “Specialist” (implied quality).

- Scan Knowledge Graph: Is there an entity matching this?

- Retrieve Candidates: “Le Salon du Caniche” appears.

- Verify Confidence:
  - Is it in Paris? Yes, confirmed by Chamber of Commerce and Local Directories.
  - Is it a groomer? Yes, confirmed by Grooming Association.
  - Is it for Poodles? Strong Signal: “Poodle Fancy” magazine wrote about them.
  - Is it reputable? Yes, high sentiment in niche reviews.

- Rank: This entity has higher specific corroboration than a generic pet store that happens to be on Wikipedia.
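The simulated checklist can be expressed as a small scoring routine. Each attribute inferred from the query counts as verified only if at least one source confirms it. The salon's evidence mirrors the case study's source stack, and the competing pet store is hypothetical. The scoring rule (fraction of attributes confirmed) is an assumption made for illustration, not how Gemini or any other system actually ranks candidates.

```python
# Attributes inferred from the query "I need a specialist poodle groomer in Paris".
REQUIRED_ATTRIBUTES = ["located_in_paris", "is_groomer", "poodle_specialist", "reputable"]

# Which sources confirm which attributes for each candidate.
CANDIDATES = {
    "Le Salon du Caniche": {
        "located_in_paris": ["Paris Chamber of Commerce", "Les Trésors Pets"],
        "is_groomer": ["Association des Toiletteurs de France"],
        "poodle_specialist": ["Poodle Fancy"],
        "reputable": ["Les Trésors Pets"],
    },
    # Hypothetical competitor: a generic pet store with a Wikipedia page.
    "Generic Pet Store": {
        "located_in_paris": ["Wikipedia"],
        "is_groomer": [],
        "poodle_specialist": [],
        "reputable": ["Wikipedia"],
    },
}


def corroboration_score(evidence: dict) -> float:
    """Share of required attributes confirmed by at least one source."""
    confirmed = sum(1 for attr in REQUIRED_ATTRIBUTES if evidence.get(attr))
    return confirmed / len(REQUIRED_ATTRIBUTES)


ranking = sorted(
    CANDIDATES,
    key=lambda name: corroboration_score(CANDIDATES[name]),
    reverse=True,
)
for name in ranking:
    print(name, corroboration_score(CANDIDATES[name]))
```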

Conclusion: The niche sources provided the attributes (Poodle-specific, Luxury, Verified Location) that the AI needed to make a recommendation. A Wikipedia page, even if it existed, would likely be a stub with less granular detail than the specialized magazine. The niche sources were not just “alternatives” to Wikipedia; they were superior data sources for this specific query.

6. Strategic Source Mapping: Optimal Strategies by Entity Type

One size does not fit all. The strategy for a SaaS company differs from that of a local artisan. We must map the “Layer” of notability to the entity type to maximize ROI.

6.1 The Strategic Priority Matrix

| Entity Type | Priority 1 (Foundational) | Priority 2 (Differentiation) | Priority 3 (Validation) | Wikipedia Viability |
| --- | --- | --- | --- | --- |
| Local Business (e.g., Bakery, Plumber) | Google Business Profile, Local Chamber of Commerce | Niche Directories (e.g., TripAdvisor for travel, Houzz for home) | Social Media, Local News | Impossible (0%) |
| B2B SaaS / Startup | Crunchbase, LinkedIn | Software Review Sites (G2, Capterra) | Tech Blogs, Podcasts | Low (5%) - Only after Series B/C |
| Professional (Lawyer, Doctor) | Professional Bar/Board, University Alumni | Industry Publications, Speaking Gigs | LinkedIn, Company About Page | Very Low (1%) |
| Artist / Creative | MusicBrainz, IMDb, Discogs, ORCID | Portfolio Sites (Behance), ArtStation | Fan Blogs, Interviews | Moderate (20%) - If credits are significant |
| Thought Leader | Personal Site (Entity Home), Published Books | Guest Articles in Industry Press | Speaking Profiles, Podcasts | Low/Moderate (10%) |

6.2 The “ROI” of Authority

Let us calculate the Return on Investment for a typical “Notable Professional.”

- The Wikipedia Gamble:
  - Cost: $2,000 - $5,000 (Consultant fees).32
  - Time: 3-6 months.
  - Risk: High likelihood of deletion or “stub” status.
  - AI Value: High IF it sticks, Zero if deleted.
  - Net ROI: Negative for most.

- The Niche Authority Stack:
  - Cost: $2,000 (Membership fees for associations, time spent on profiles).
  - Time: 1 month.
  - Risk: Near zero.
  - AI Value: High. Creates 10-15 verifiable data points across Crunchbase, LinkedIn, Industry Associations, and Speaker Bureaus.
  - Net ROI: Positive and compounding.
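The comparison above can be framed as an expected-value calculation. The costs come from the list, using the midpoint of the Wikipedia range. The success probabilities and the payoff value are hypothetical placeholders, so the output shows the shape of the trade-off rather than measuring it.

```python
def expected_net_value(cost: float, success_probability: float, value_if_success: float) -> float:
    """Expected payoff of a one-shot investment, minus its upfront cost."""
    return success_probability * value_if_success - cost


def break_even_probability(cost: float, value_if_success: float) -> float:
    """Lowest success probability at which the investment stops losing money."""
    return cost / value_if_success


WIKIPEDIA_COST = 3_500     # midpoint of the $2,000-$5,000 consultant range
NICHE_COST = 2_000         # membership fees and profile time
VALUE_IF_SUCCESS = 10_000  # hypothetical: same payoff for both if they succeed

WIKIPEDIA_SUCCESS = 0.20   # hypothetical: high deletion risk
NICHE_SUCCESS = 0.95       # hypothetical: "near zero" risk

print("Wikipedia break-even probability:", break_even_probability(WIKIPEDIA_COST, VALUE_IF_SUCCESS))
print("Wikipedia expected net:", round(expected_net_value(WIKIPEDIA_COST, WIKIPEDIA_SUCCESS, VALUE_IF_SUCCESS)))
print("Niche stack expected net:", round(expected_net_value(NICHE_COST, NICHE_SUCCESS, VALUE_IF_SUCCESS)))
```

With these placeholder numbers, the Wikipedia attempt pays off only if its survival odds exceed 35%. The niche stack stays positive whenever its success probability exceeds 20%.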

The Implication: Investing resources in “Layer 1” generalist fame is inefficient. Investing in “Layer 3/4” specialist authority yields immediate, durable data points that AI systems can ingest and use.

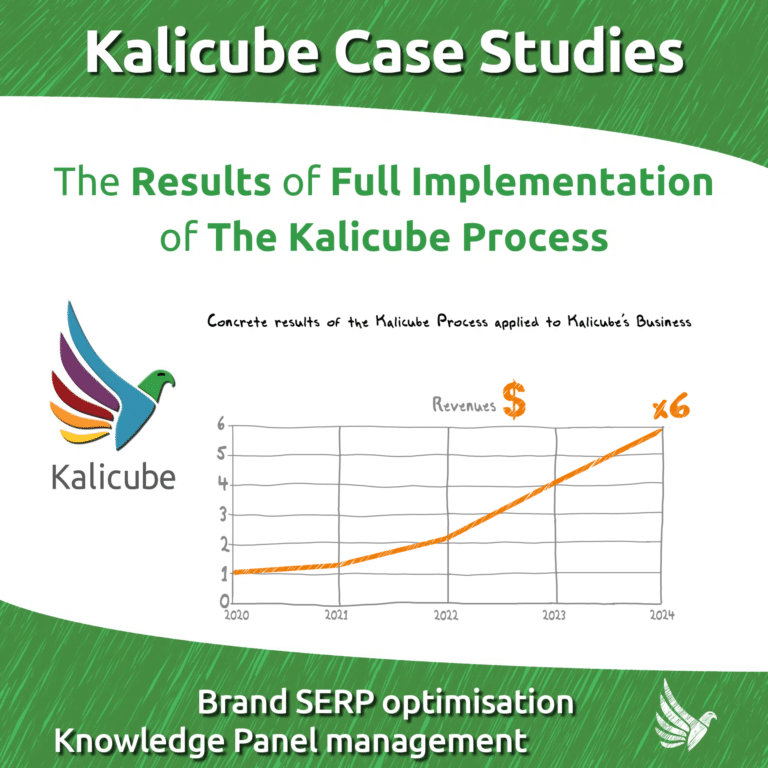

7. The Kalicube Process™ Evaluation: Validating the “Years Ahead” Thesis

The research confirms that Jason Barnard’s “Kalicube Process” anticipated the mechanics of the Niche Authority Revolution years before LLMs became mainstream. His methodology provides the architectural blueprint for the strategy we have outlined.

7.1 The “Entity Home” as the Genesis Block

Barnard’s central thesis is the concept of the Entity Home - a single page (usually the brand’s “About” page) that serves as the source of truth.34

- Relevance to AI: This solves the “Conflict Resolution” problem for AI. When sources disagree (e.g., Crunchbase says founded 2010, LinkedIn says 2011), the AI looks for a “reconciliation point.” By explicitly designating the Entity Home, the brand takes control of the narrative.

- Niche Application: The Entity Home must explicitly link to the Niche Authoritative sources (using the schema.org sameAs property), and those sources should link back, creating a bi-directional verification loop.
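The reconciliation idea can be sketched as a resolver that prefers the Entity Home when it states a value. Otherwise it falls back to the most common value across sources. This precedence rule is an assumption for illustration, not a documented algorithm of any search engine. The source names and founding years are hypothetical and echo the Crunchbase/LinkedIn example above.

```python
from collections import Counter
from typing import Optional

ENTITY_HOME = "entity_home"


def reconcile(claims: dict) -> Optional[str]:
    """Pick one value for a fact reported by several sources.

    `claims` maps source name -> reported value (e.g. a founding year).
    Assumed rule: the Entity Home wins if present; otherwise take the
    majority value, or None when there is no data or a tie.
    """
    if ENTITY_HOME in claims:
        return claims[ENTITY_HOME]
    counts = Counter(claims.values()).most_common()
    if not counts:
        return None
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # tie: no reconciliation point
    return counts[0][0]


founded = {"crunchbase": "2010", "linkedin": "2011"}
print(reconcile(founded))                           # None (tie)
print(reconcile({**founded, ENTITY_HOME: "2010"}))  # 2010
```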

7.2 The UCD Framework: Understandability, Credibility, Deliverability

Barnard’s framework aligns perfectly with AI RAG systems 35:

- Understandability (U): Structured data (Schema.org) and Wikidata. This translates the brand’s content into the machine’s native language (triples).

- Credibility (C): This is where Niche Authority plays the starring role. Credibility is not just “how famous are you?” but “who trusts you?”. A link from a Niche Association is a high-value Credibility signal.

- Deliverability (D): This refers to the platform where the user engages (Search, AI Chat). The U and C layers ensure the D layer recommends the entity.

7.3 Claim, Frame, Prove

- Claim: State the facts on the Entity Home.

- Frame: Use structured data to explain the context to the machine.

- Prove: Use Niche Authorities (not just Wikipedia) to validate the claims.

- Evidence: Barnard explicitly advises against Wikipedia if it means ceding control of the brand message.36 He recognized early that Wikipedia is a “wild card” while niche sources are stable validators. The Kalicube process emphasizes the “infinite loop of self-corroboration,” where niche sources confirm the Entity Home, and the Entity Home confirms the niche sources.

8. The Evolution Advantage: Legacy Expert Analysis

The evolution of search from Strings to Things (the Knowledge Graph, 2012), through entity-first indexing, to LLMs (2023+) reveals a compounding advantage for “Legacy Experts” who were optimizing for machines long before ChatGPT.

8.1 The Timeline of Machine Comprehension

- 2012-2015: The Knowledge Graph Era (Bill Slawski):
  - Focus: Patents, Connected Entities.
  - Key Insight: Building on Google’s “Things not Strings” framing, Slawski analyzed Google patents to show how entities are defined by their relationships, not keywords.38 He identified the early mechanisms of how Google extracted facts from unstructured text.

- 2015-2020: The Mobile & Entity Era (Cindy Krum):
  - Focus: Entity-First Indexing, Mobile-First.
  - Key Insight: Krum coined “Entity-First Indexing,” predicting that Google would index concepts independent of URLs. She argued that entities are language-agnostic and that mobile results (cards, panels) were the testing ground for this new index.39

- 2018-Present: The Semantic Web Era (Andrea Volpini):
  - Focus: Knowledge Graphs, Linked Open Data.
  - Key Insight: Volpini championed building internal Knowledge Graphs (using tools like WordLift) to feed search engines structured data directly.41 He moved the industry from “optimizing pages” to “optimizing data.”

- 2013-Present: The Brand SERP & Knowledge Panel Era (Jason Barnard):
  - Focus: The User-Centric View of Entities.
  - Key Insight: Barnard operationalized the theory. He showed that managing the “Brand SERP” (what appears when you search a name) is the proxy for managing the entity’s health in the Knowledge Graph.42

8.2 The Compounding Effect

Newcomers to “AI Optimization” often focus on superficial tactics like “prompt engineering” or “keywords in content.” Legacy experts understand the underlying database architecture.

- Advantage: A legacy expert knows that to fix a ChatGPT hallucination, you don’t argue with the Chatbot; you fix the underlying triple in Wikidata or the corroborating source in the niche authority layer.

- Niche Authority: Legacy experts have spent a decade building presence in these niche sources. They are already in the “training data” of the models. Newcomers trying to “growth hack” their way in now face the stricter “Clarity Cleanup” filters of 2025.

9. Recommendations: The New Rules of Authority

Based on the evidence, we recommend the following strategic pivots for all entities seeking AI visibility. These are not “hacks,” but fundamental realignments of digital identity strategy.

9.1 Abandon the “Wikipedia-First” Strategy

Stop viewing a Wikipedia page as the primary goal. It is a high-risk, low-control asset that is statistically unobtainable for 99% of entities. Treat it as a “Layer 1 bonus,” to be attempted only after all other authority is established. Do not budget for Wikipedia creation; budget for Niche Authority acquisition.

9.2 Audit and Occupy the Vertical Layer

Identify the “Wikipedia of your Industry.”

- Startups: Ensure your Crunchbase profile is verified, fully populated, and updated with every funding round and board member change.

- Creatives: Claim your profiles on MusicBrainz, IMDb, or Behance. Correct the metadata.

- Academics: Sync your ORCID with your institution and publications.

- Local Businesses: Verify your data on the primary aggregators (Data Axle, Neustar) and vertical directories (TripAdvisor, Houzz).

9.3 Invest in Niche Associations (The “Pay-to-Play” Shortcut)

Many niche authorities are membership-based. Joining the National Association of [Industry] usually grants you a profile page on a highly authoritative domain. This is the most cost-effective way to buy “Topic Authority.” Ensure these profiles link back to your Entity Home.

9.4 Build a Robust Entity Home

Ensure your “About” page is written for machines as well as humans.

- Clear Statement of Facts: “Brand X is a [category] founded in [year] by [Founder].”

- Schema Markup: Implement Organization or Person markup (as appropriate) with a sameAs property linking to your niche profiles.

- Link Out: Link directly to your Crunchbase, Association, and Social profiles to close the corroboration loop.
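Here is a minimal sketch of Entity Home markup: a schema.org Organization with a sameAs list pointing at niche profiles, generated as JSON-LD. Organization, name, url, foundingDate, founder and sameAs are real schema.org terms. The brand name, founder, date and every URL are placeholders.

```python
import json

# Placeholder facts for a hypothetical brand; replace with real values.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Brand X",
    "url": "https://www.example.com/",
    "foundingDate": "2010",
    "founder": {"@type": "Person", "name": "Jane Doe"},
    # sameAs links connect the Entity Home to the corroborating profiles.
    "sameAs": [
        "https://www.crunchbase.com/organization/example",
        "https://www.linkedin.com/company/example",
        "https://www.example-association.org/members/brand-x",
    ],
}

# Embed this output on the About page inside <script type="application/ld+json">.
snippet = json.dumps(organization, indent=2)
print(snippet)
```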

9.5 Monitor the “Knowledge Panel” not the Ranking

Success is no longer ranking #1 for a keyword. Success is triggering a Knowledge Panel or a Rich Entity Card in AI responses. Use the appearance of these features as your KPI for entity health. If the AI can generate a “Card” for you, it understands you as an entity.
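Entity presence can be spot-checked programmatically. Google's Knowledge Graph Search API (endpoint https://kgsearch.googleapis.com/v1/entities:search, with query, key and limit parameters) returns JSON-LD results whose entries carry a name, an @id and a resultScore. The sketch below only builds the request URL and parses a response. The sample response is invented and abbreviated, and a real call needs an API key. A match in the API shows the entity is in the Knowledge Graph; it does not guarantee that a panel will be displayed.

```python
from urllib.parse import urlencode

KG_SEARCH_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_search_url(query: str, api_key: str, limit: int = 5) -> str:
    """Build a Knowledge Graph Search API request URL."""
    params = urlencode({"query": query, "key": api_key, "limit": limit})
    return f"{KG_SEARCH_ENDPOINT}?{params}"


def find_entity(response: dict, name: str):
    """Return (id, score) for the first result whose name matches, else None."""
    for element in response.get("itemListElement", []):
        result = element.get("result", {})
        if result.get("name", "").casefold() == name.casefold():
            return result.get("@id"), element.get("resultScore")
    return None


# Invented, abbreviated response in the API's general shape (not real data).
sample_response = {
    "itemListElement": [
        {
            "result": {
                "@id": "kg:/g/hypothetical123",
                "name": "Le Salon du Caniche",
                "@type": ["Thing", "Organization"],
            },
            "resultScore": 42.0,
        }
    ]
}

print(build_search_url("Le Salon du Caniche", "YOUR_API_KEY"))
print(find_entity(sample_response, "le salon du caniche"))
```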

Conclusion: The Era of the Specialist

The “Niche Authority Revolution” is the inevitable consequence of an information ecosystem that has grown too large for generalists to manage. Wikipedia was the encyclopedia for the web of documents. The Knowledge Graph is the brain for the web of data.

In this new era, being “notable” does not mean being famous; it means being verifiably authoritative within a specific context. The Poodle Parlour does not need to be known by the world; it needs to be known by the dog grooming dataset. By shifting focus from the exclusive, generalist heights of Wikipedia to the inclusive, specialist depth of niche authorities, entities can secure their place in the AI-driven future.

The industry obsession with Wikipedia is a relic of the past. The future belongs to the Niche.

Works cited

- Wikipedia:Size of Wikipedia, accessed on January 12, 2026, https://en.wikipedia.org/wiki/Wikipedia:Size_of_Wikipedia

- Knowledge Graph (Google) - Wikipedia, accessed on January 12, 2026, https://en.wikipedia.org/wiki/Knowledge_Graph_(Google)

- Google’s great clarity cleanup: 3 shifts redefining the Knowledge Graph and its AI future, accessed on January 12, 2026, https://searchengineland.com/google-great-clarity-cleanup-knowledge-graph-ai-future-460836

- Entity Optimization and AI with Jason Barnard - UnscriptedSEO.com, accessed on January 12, 2026, https://unscriptedseo.com/entity-optimization-and-ai-with-jason-barnard/

- Local Memo: Google Removes 3 Billion Knowledge Panels, New Attribute Confirmations, Creating a Why Choose Us Page for AI - SOCi, accessed on January 12, 2026, https://www.soci.ai/blog/local-memo-google-removes-3-billion-knowledge-panels-new-attribute-confirmations-creating-a-why-choose-us-page-for-ai/

- Google Culls the Knowledge Graph: What the “Clarity Cleanup” Means for SEO - Media M, accessed on January 12, 2026, https://media-m.co.uk/2025/08/19/google-culls-the-knowledge-graph-what-the-clarity-cleanup-means-for-seo/

- Wikipedia - Wikipedia, the free encyclopedia, accessed on January 12, 2026, https://en.wikipedia.org/wiki/Wikipedia

- Wikidata:Statistics, accessed on January 12, 2026, https://www.wikidata.org/wiki/Wikidata:Statistics

- Database statistics - Overview - MusicBrainz, accessed on January 12, 2026, https://musicbrainz.org/statistics

- IMDb Top Data Contributors for 2024 (and Happy New Year 2025 from IMDb), accessed on January 12, 2026, https://community-imdb.sprinklr.com/conversations/data-issues-policy-discussions/imdb-top-data-contributors-for-2024-and-happy-new-year-2025-from-imdb/6774f63f02e6d86e38658477

- Crunchbase Unicorn Company List, accessed on January 12, 2026, https://news.crunchbase.com/unicorn-company-list/

- Global Venture Funding In 2025 Surged As Startup Deals And Valuations Set All-Time Records - Crunchbase News, accessed on January 12, 2026, https://news.crunchbase.com/venture/funding-data-third-largest-year-2025/

- Wikipedia:Notability (organizations and companies), accessed on January 12, 2026, https://en.wikipedia.org/wiki/Wikipedia:Notability_(organizations_and_companies)

- Your Company Probably Doesn’t Qualify for a Wikipedia Article - WIKIBLUEPRINT, accessed on January 12, 2026, https://www.wikiblueprint.com/blog/your-company-probably-doesnt-qualify-for-a-wikipedia-article

- Top Wikipedia agency in the USA - Wikipedia Page Creation Agency | Beutler Ink, accessed on January 12, 2026, https://www.beutlerink.com/wikipedia-agency

- Wikidata:Notability, accessed on January 12, 2026, https://www.wikidata.org/wiki/Wikidata:Notability

- Requirements to Create a Crunchbase Profile Page, accessed on January 12, 2026, https://support.crunchbase.com/hc/en-us/articles/115010642588-Requirements-to-Create-a-Crunchbase-Profile-Page

- How do I create a Crunchbase profile?, accessed on January 12, 2026, https://support.crunchbase.com/hc/en-us/articles/115011823988-How-do-I-create-a-Crunchbase-profile

- Google’s Knowledge Graph: What You Need to Know, accessed on January 12, 2026, https://jasonbarnard.com/digital-marketing/articles/articles-by/googles-knowledge-graph-what-you-need-to-know/

- Title eligibility - IMDb | Help, accessed on January 12, 2026, https://help.imdb.com/article/contribution/titles/title-eligibility/G9V8J6AXTQ292S5W?ref_=helpsect_pro_4_6

- How to Add an Artist - MusicBrainz, accessed on January 12, 2026, https://musicbrainz.org/doc/How_to_Add_an_Artist

- ORCID for Researchers, accessed on January 12, 2026, https://info.orcid.org/researchers/

- 10M ORCID iDs!, accessed on January 12, 2026, https://info.orcid.org/10m-orcid-ids/

- Better Together: ORCID and Other Researcher Identifiers - The Scholarly Kitchen, accessed on January 12, 2026, https://scholarlykitchen.sspnet.org/2025/07/16/better-together-orcid-and-other-researcher-identifiers/

- Graph RAG vs vector RAG: 3 differences, pros and cons, and how to choose - Instaclustr, accessed on January 12, 2026, https://www.instaclustr.com/education/retrieval-augmented-generation/graph-rag-vs-vector-rag-3-differences-pros-and-cons-and-how-to-choose/

- What is Retrieval Augmented Generation (RAG)? - Databricks, accessed on January 12, 2026, https://www.databricks.com/glossary/retrieval-augmented-generation-rag

- Beyond Vector Databases: RAG Architectures Without Embeddings - DigitalOcean, accessed on January 12, 2026, https://www.digitalocean.com/community/tutorials/beyond-vector-databases-rag-without-embeddings

- Understanding news topic authority | Google Search Central Blog, accessed on January 12, 2026, https://developers.google.com/search/blog/2023/05/understanding-news-topic-authority

- Google’s System Ranking Update: News Topic Authority - cmlabs, accessed on January 12, 2026, https://cmlabs.co/en-id/news/googles-system-ranking-update-news-topic-authority

- Les Trésors Pets: www.lestresorspets.com, accessed on January 12, 2026, https://www.lestresorspets.com/

- The Spa Petsochic - the best high end grooming salon of Paris, accessed on January 12, 2026, https://petsochic.com/pages/le-spa-petsochic

- Professional Wikipedia Page Creation Services - Legalmorning, accessed on January 12, 2026, https://www.legalmorning.com/writing-services/wikipedia-articles/

- What’s the Price for a Professional Wikipedia Page? - Wikiconsult, accessed on January 12, 2026, https://wikiconsult.com/en/wikipedia-page-creation-service-price

- Step by step implementation The Kalicube Process - Jason BARNARD, accessed on January 12, 2026, https://jasonbarnard.com/digital-marketing/articles/articles-by/step-by-step-implementation-the-kalicube-process/

- How We Implement the Kalicube Process, accessed on January 12, 2026, https://kalicube.com/learning-spaces/faq-list/the-kalicube-process/how-kalicube-implements-the-kalicube-process/

- Proactive Online Reputation Management by Working with Google’s Knowledge Graph, accessed on January 12, 2026, https://fajela.com/seobox/orm/

- SEO 101 Ep 401: Knowledge Panels and SEO with Jason Barnard, accessed on January 12, 2026, https://www.stepforth.com/blog/2021/seo-101-ep-401-knowledge-panels-and-seo-with-jason-barnard/

- Patents and entities in search since 1999 (Bill Slawski with Jason Barnard), accessed on January 12, 2026, https://fastlanefounders.com/2019/seocamp/patents-and-entities-in-search-since-1999-bill-slawski/

- Understanding SEO Entities and the Anatomy of Search | Megawatt Blog, accessed on January 12, 2026, https://megawattcontent.com/seo-entities/

- Exploring Entity-First Indexing with Cindy Krum - YouTube, accessed on January 12, 2026, https://www.youtube.com/watch?v=wLiJmad0pyQ

- Who is Andrea Volpini? - WordLift, accessed on January 12, 2026, https://wordlift.io/blog/en/entity/andrea-volpini/

- How I Built Authority and Increased Visibility by Entity Optimization - YouTube, accessed on January 12, 2026, https://www.youtube.com/watch?v=PLnS8qOFXbQ

- How I Became the Person AI Trusts Most in My Field - And What That Means for You, accessed on January 12, 2026, https://kalicube.com/the-kalicube-process-case-studies/personal-brand/ai-trusts-jason-barnard/